spark

Definition

This node runs code you have written on Spark.

From the [Flow구성] (Flow Composition) nodes on the left, drag and drop the [spark] node, then fill in its Property fields.

Click the [더보기+] (More +) button in the Property panel to see every Property field you can enter.

Set

For the [setting], [scheduler], and [parameter] settings, see [워크플로우] (Workflow) > [생성] (Create) > [기본구성] (Basic Configuration).

property

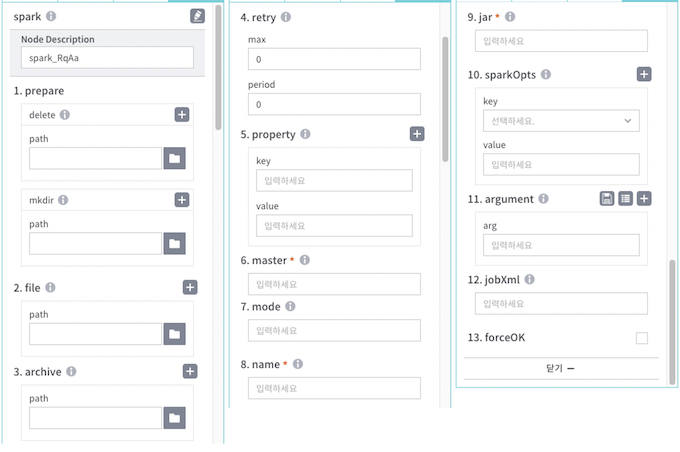

[Node Description]: Name of the node you are creating

prepare: Before the node runs, deletes files or creates folders so that the node's results can be saved to HDFS (useful when the workflow is run repeatedly)

- delete: Path of the folder or file to delete before the node runs

- mkdir: Path of the folder to create before the node runs
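Why prepare matters for repeated runs can be sketched locally. This is a minimal illustration, not the workflow engine's code: it uses the local filesystem as a stand-in for HDFS, assumes that all delete paths are removed before any mkdir folder is created, and the prepare and run_job functions and the avg_output and logs folders are hypothetical. The simulated job refuses to overwrite an existing output folder, as Spark's file writers do by default, so the second run only succeeds because prepare deletes the previous output first.

```python
import shutil
import tempfile
from pathlib import Path


def prepare(delete_paths, mkdir_paths):
    """Hypothetical stand-in for the node's prepare step (local disk instead of HDFS).

    Assumption: every delete path is removed first, then every mkdir folder is created.
    """
    for path in map(Path, delete_paths):
        if path.is_dir():
            shutil.rmtree(path)          # delete a folder and everything in it
        elif path.exists():
            path.unlink()                # delete a single file
    for path in map(Path, mkdir_paths):
        path.mkdir(parents=True, exist_ok=True)


def run_job(output_dir):
    """Simulated job that, like Spark's default file output, will not overwrite existing output."""
    out = Path(output_dir)
    out.mkdir()                          # raises FileExistsError if the folder already exists
    (out / "part-00000").write_text("result\n")


base = Path(tempfile.mkdtemp())
output = base / "avg_output"
for _ in range(2):                       # without prepare, the second run would fail
    prepare(delete_paths=[output], mkdir_paths=[base / "logs"])
    run_job(output)
```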

file: Path of a library (or other file) the node uses

archive: Path of an archive file

retry

- max: Number of retries if execution fails

- period: Interval between retries, in minutes
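The two retry settings can be read as the loop below. This is a hedged sketch, not the workflow engine's implementation: it assumes max counts retries after the first attempt (so up to max + 1 runs in total) and that period is a fixed wait in minutes between attempts. The run_with_retry function and its sleep parameter are hypothetical; sleep is injectable only so the example runs instantly.

```python
import time


def run_with_retry(action, max_retries, period_minutes, sleep=time.sleep):
    """Run action(); on failure retry up to max_retries times, waiting period_minutes between tries.

    Assumption: max_retries excludes the first attempt. Re-raises the last error when retries run out.
    """
    retries = 0
    while True:
        try:
            return action()
        except Exception:
            if retries >= max_retries:
                raise                      # out of retries: the node fails
            retries += 1
            sleep(period_minutes * 60)     # period is in minutes; sleep() takes seconds


# Usage: a hypothetical job that fails twice, then succeeds.
calls = []
waits = []


def flaky_job():
    calls.append(len(calls) + 1)
    if len(calls) < 3:
        raise RuntimeError("transient failure")
    return "ok"


result = run_with_retry(flaky_job, max_retries=3, period_minutes=5, sleep=waits.append)
print(result, len(calls), waits)  # ok 3 [300, 300]
```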

property: Properties (key, value) to use at run time

master: Spark master URL to use (enter yarn when using a cluster created in DHP)

mode: Spark deploy mode, client or cluster (enter client when using a cluster created in DHP)

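| deploy-mode | Description |
| --- | --- |
| client | Runs the driver program locally, on the machine that submits the job |
| cluster | Runs the driver program on one of the worker machines |

name: Spark application name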

jar: Path of the file to run, such as a Python (.py) script or a JAR

sparkOpts: Options (key, value) passed to Spark when the job is launched

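| key | value (example) | Description |
| --- | --- | --- |
| --driver-cores | 1 | Number of cores for the Spark driver (applies in cluster mode only) |
| --driver-memory | 1024m | Memory for the Spark driver |
| --executor-cores | 1 | Number of cores per executor |
| --executor-memory | 1g | Memory per executor |
| --num-executors | 1 | Number of executors to launch |
| --queue | default | Name of the YARN queue to submit the job to |
| --conf | PROP=VALUE | Sets any Spark configuration property |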

[Note] For more details, see:

https://spark.apache.org/docs/latest/running-on-yarn.html
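To see how master, mode, name, jar, sparkOpts, and argument fit together, the sketch below assembles an equivalent spark-submit command. It is an assumption that the node launches the job this way; the build_spark_submit function, the application name, and the arguments are hypothetical, while --master, --deploy-mode, --name, and the sparkOpts keys in the table above are standard spark-submit options. The application file goes after all Spark options, and anything after it is passed to the application rather than to Spark. A JAR application usually also needs --class, which this sketch omits.

```python
import shlex


def build_spark_submit(master, mode, name, jar, spark_opts, arguments=()):
    """Hypothetical mapping of node properties to a spark-submit argument list.

    spark_opts: (key, value) pairs such as ("--executor-memory", "1g").
    arguments:  values handed to the application itself, placed after the .py/JAR file.
    """
    cmd = ["spark-submit", "--master", master, "--deploy-mode", mode, "--name", name]
    for key, value in spark_opts:
        cmd += [key, value]              # each value stays one argument, even if it has spaces
    cmd.append(jar)                      # application file: a .py script or a JAR
    cmd.extend(arguments)
    return cmd


cmd = build_spark_submit(
    master="yarn",
    mode="client",
    name="basicavg",                     # hypothetical application name
    jar="basicavg.py",
    spark_opts=[("--driver-memory", "1024m"), ("--num-executors", "1"), ("--queue", "default")],
    arguments=["1", "2", "3"],           # hypothetical application arguments
)
print(shlex.join(cmd))
```

Keeping the command as a list rather than one string means a value such as a --conf setting that contains spaces is never split apart by accident.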

- argument: Arguments passed to the Python code when it runs

- jobXml: Path of a job XML file. Properties for the job can be written in a separate XML file and passed this way.

- forceOK: Marks the node as successful and ends it even if data processing fails
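The format of the jobXml file is not specified here. A common convention, and the one Oozie's job-xml element reads, is the Hadoop configuration format: a configuration root holding property entries, each with a name and a value. Assuming that format, the sketch below writes such a document from a dictionary using only Python's standard library; the job_xml function and the property names are hypothetical placeholders, so check which format and properties your workflow engine actually expects.

```python
import xml.etree.ElementTree as ET


def job_xml(properties):
    """Render a dict as a Hadoop-style <configuration> document (assumed jobXml format)."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    return ET.tostring(root, encoding="unicode")


# Hypothetical properties to hand to the job.
xml_text = job_xml({"example.input.dir": "/user/demo/input", "example.threshold": 10})
print(xml_text)
```

The resulting text would be saved to a file, uploaded, and its path entered in jobXml.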

Example

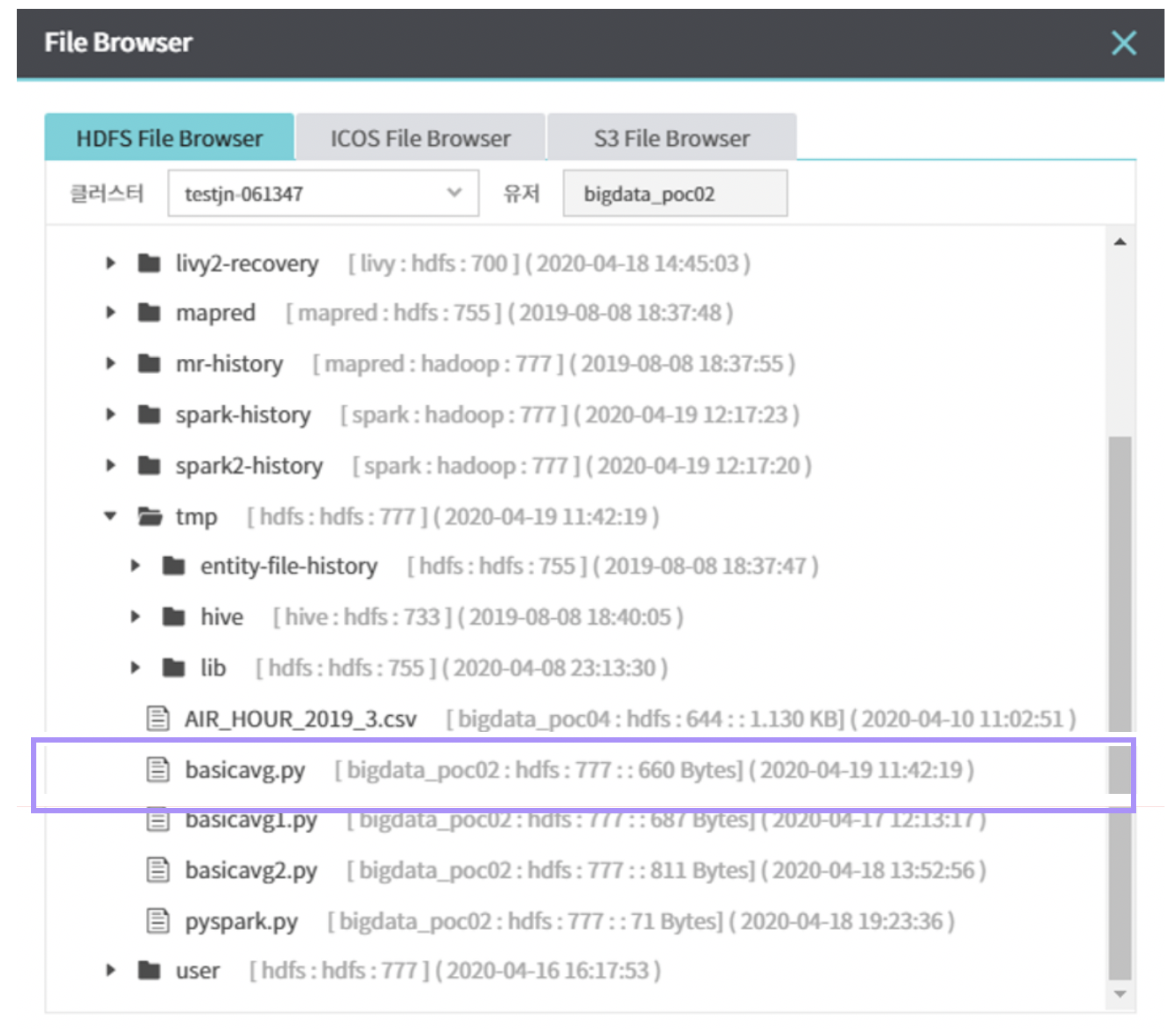

This example runs basicavg.py, a script that computes the average of the numbers it receives, on Spark.
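The contents of basicavg.py are not shown in this guide. As a hedged sketch of the arithmetic such a script typically performs, assuming the numbers are split across partitions as Spark does with an RDD: each partition is reduced to a (sum, count) pair, the pairs are merged, and the total is divided once at the end. Averaging the per-partition averages instead gives a wrong result when partitions differ in size. The code is plain Python with hypothetical function names, so it runs without a Spark installation.

```python
from functools import reduce


def partition_stats(partition):
    """Reduce one partition of numbers to a (sum, count) pair."""
    return (sum(partition), len(partition))


def merge(a, b):
    """Combine two (sum, count) pairs; the order of merging does not matter."""
    return (a[0] + b[0], a[1] + b[1])


def distributed_avg(partitions):
    """Average over all partitions, dividing only once at the end."""
    total, count = reduce(merge, map(partition_stats, partitions), (0, 0))
    if count == 0:
        raise ValueError("no input numbers")
    return total / count


parts = [[1, 2, 3], [4]]                                   # hypothetical input: 2 partitions of unequal size
print(distributed_avg(parts))                              # 2.5
print(sum(sum(p) / len(p) for p in parts) / len(parts))    # 3.0, the wrong "average of averages"
```

In PySpark the same pattern is usually written on an RDD as nums.map(lambda x: (x, 1)).fold((0, 0), merge), followed by a single division of the sum by the count.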

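1. Upload basicavg.py to the cluster that runs the workflow.

2. Drag and drop the [Flow구성] > [spark] node, then select the execution cluster in the setting panel.

3. In the property panel, enter the following:

   - In [2. file], use the HDFS browser to select the path where basicavg.py is stored.

   - Enter the remaining fields as follows: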

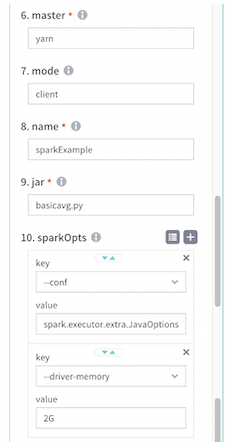

| property | value |
| --- | --- |
| 6. master | yarn |
| 7. mode | client |
| 9. jar | basicavg.py |

Enter [10. sparkOpts] as follows (see the sparkOpts option descriptions under property above):

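| key | value |
| --- | --- |
| --driver-memory | 2G |
| --conf | spark.executor.extraJavaOptions=-Xms512m -Xmx1024m |

Note that Spark does not allow the maximum heap size (-Xmx) to be set through spark.executor.extraJavaOptions and rejects it with a message to use spark.executor.memory instead. If the job fails at startup for this reason, set the executor heap size with --executor-memory and keep only other JVM flags, such as -Xms512m, in --conf.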